Monitoring and evaluating complex systems

In the majority of our projects, we support clients to monitor, evaluate and make evidence-based decisions on complex interventions, or on interventions that engage with complex systems, in areas including climate change, pandemics, migration and conflict. Most of the issues our partners and clients work on fall into the ‘systemic problems’ category: they have multi-faceted causes, and their solutions require change at the individual, community, societal, organisational, government, policy and global levels.

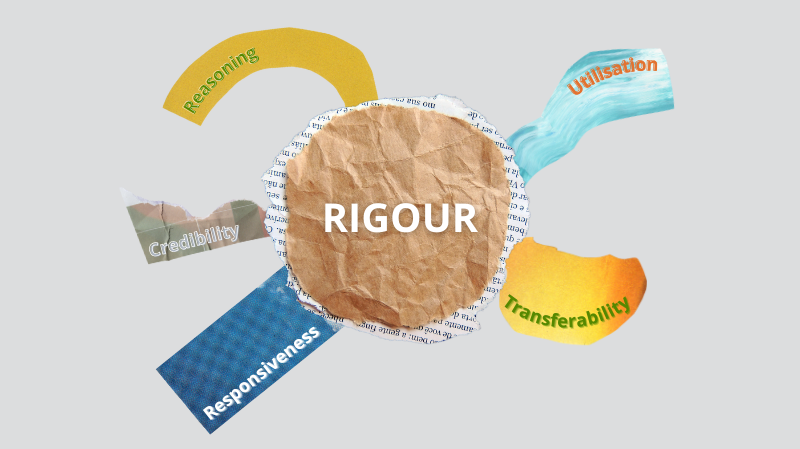

Embracing complexity in evaluation requires acknowledging that a single approach, applied in a standardised way, is rarely enough to meet the evaluation needs of large, learning-oriented programmes. It also requires a new definition of rigour, one that takes into account “the special conditions of each inquiry” and unpicks social, political, economic and environmental processes of change.

Intentional methodological recombination

In the real world of evaluation, faced with multiple priorities and constraints, evaluators often combine the component parts of certain methods to offset the weaknesses of others. They also skip, substitute and repurpose the steps and tools of evaluation approaches to suit their purposes. This is referred to as methodological bricolage.

Yet, while guidance for choosing appropriate methods abounds, it generally focuses on choosing between different methods, rather than on combining them. A recent Practice Paper from the Centre for Development Impact offers a framework to support evaluators to intentionally combine methods more rigorously and effectively.

Advancing understandings and use of bricolage

As we celebrate 10 years of the Centre for Development Impact (CDI), we are excited to launch a series of actions on the practice of bricolage. Throughout 2023 and 2024, we invite you to reflect with core CDI partners Itad, the Institute of Development Studies and the University of East Anglia, and our broader community of practice, on the ways in which evaluators are combining methods to design and implement credible and useful evaluations.

- On 3 October, as part of the 2023 UK Evaluation Society Conference, Marina Apgar (Institute of Development Studies), Tom Aston (independent consultant), Giovanna Voltolina (Itad) and Melanie Punton (Itad) will use the framework for bricolage from the CDI Practice Paper to reflect on how we ensured rigour in bricolage in our Adolescent 360 Process Evaluation and Fleming Fund evaluation.

- During the 2023 American Evaluation Association Conference, Marina Apgar and colleagues from the Inclusive Rigour network will explore experiences of bricolage that combine narrative and participatory methods in the practice of inclusive rigour.

- In a series of blog posts, the authors of earlier CDI Practice Papers will look back and reflect on how they adapted or combined their methods in their evaluation practice.

Do you have experience with methodological bricolage that you’d like to share? We’d love to hear from you, so please get in touch.