Much has been written about what can be achieved using a market systems or M4P (making markets work for the poor) approach, and there appears to be a consensus among practitioners that the approach can yield important benefits for poor people. However, robust evaluation evidence that actually demonstrates impact is thin on the ground.

There are relatively few examples of rigorous impact evaluations of market systems programmes, and debates continue over which evaluation methods are appropriate. Previous research has highlighted several shortcomings of existing evaluations: difficulty in assessing whether programmes led to systemic change, limited attention paid to unintended or negative impacts, and weaknesses in how qualitative and quantitative data are used.

It is not surprising that it has taken time to develop an adequate evaluation approach. The complexity of market systems, the fact that programmes aim to facilitate rather than directly deliver change, and the operational difficulties of carrying out field work have meant that designing and implementing an evaluation is not straightforward.

BEAM’s Evaluation Guidance sets out to help evaluators, programme managers and donors steer round these and other problems. Key recommendations from the Evaluation Guidance include:

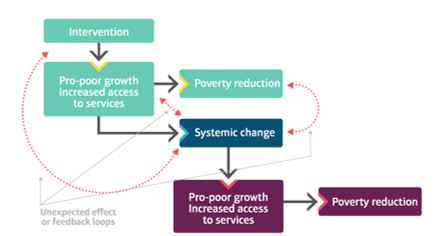

1. Use a theory-based approach for the evaluation. Programmes aim to promote change in different parts of a market system. Identifying where and how impacts are being achieved means focusing on causal chains and understanding how and why interventions work in a particular context. Theory-based evaluation provides the basis for doing this.

2. Use a mixture of methods, from different sources. A theory-based evaluation for a market systems programme will typically need to employ a range of different methods to assess what has changed, and to draw conclusions supported by evidence from different (triangulated) sources.

3. Breaking a programme down into its component parts, and understanding the different ways in which interventions achieve impacts, can help determine which methods will be useful. Where the impact of a single intervention can be isolated from the effects of the rest of the programme, it may be possible to use experimental or quasi-experimental methods. These quantify impacts and attribute them to a programme by comparing a treatment group with a control group (as Samarth NMDP did for an intervention to boost ginger production in Nepal).

The Evaluation Guidance explains how conceptualising change in an intervention can help with the choice of methods.

4. Experimental methods will not be enough on their own, however (see point 2), and are less likely to work where different projects focusing on different elements of the market system have been implemented as an integrated programme. In that case it will be harder, or even impossible, to separate out and quantify the impact of a single intervention.

5. Evaluations will need to place a strong emphasis on the principle of contribution. Programmes only facilitate change, so the behaviour of other actors will also make a significant difference to outcomes. In such cases, a design that assesses the contribution of a programme (alongside other causes) will be necessary, using methods such as contribution analysis, as employed in the evaluation of a financial sector programme in Kenya.

6. Focus on systemic change. Evaluations need to focus on the extent to which programmes have changed the way the market system works. Research and debate continue on how best to characterise and measure systemic market change. However, a useful starting point is to assess ‘imitation’ and ‘buy-in’ indicators. Another is to focus on scale (how many people benefitted?), sustainability (will benefits continue to accrue once the intervention ends?) and resilience (do market actors have the ability to adapt to future challenges?).

7. A variety of perspectives are required to understand what is happening in a system. Producers, traders, extension agents, and government officials play different roles in a market system. Each will have different views on what is changing, and who is benefitting.

8. Has a programme really helped to make a market work better for the poor? Evaluations need to investigate and take into account a wide range of perspectives and experiences of people living in poverty. This should include both direct beneficiaries (e.g. farmers using new methods introduced with the help of the programme), and indirect beneficiaries (others living in the same communities).

9. Incorporate methods that can identify unexpected impacts. As the name suggests, theory-based evaluation takes the programme’s theory of change as its starting point. However, the complexity of market systems means that evaluators should look beyond the results anticipated in the theory of change, and should also probe for negative impacts.

10. Pay special attention to bias when designing the study, and to the issue of validity when interpreting the results. Careful attention to sampling is essential for quantitative surveys, as is a rigorous assessment of whether the results are applicable to the wider population. Qualitative research can provide a rigorous basis for evaluating impact, but there is significant potential for bias. This risk should be acknowledged, and ways of addressing it considered systematically, at the planning stage of an evaluation.

While market systems are complex by nature, evaluations of the programmes working within them need not be, and it should be possible to take all of the above into account when designing one. The BEAM Evaluation Guidance provides further detail on these points, and on other issues to consider in designing and implementing an evaluation study.

Fionn O’Sullivan, February 2016

Note: a version of this blog also appears on the BEAM Exchange website https://beamexchange.org/