One of the key themes of the 2019 UK Evaluation Society conference was complex systems thinking. Keynote speaker Dr Matt Egan, from the London School of Hygiene and Tropical Medicine, suggested that systems thinking is vitally important to help us understand a complex reality.

For evaluation practice, this entails moving away from ‘traditional evaluations’ of discrete interventions with clear start and end dates and clear causal pathways, towards an appreciation of a messier reality in which interventions are embedded in, and interact with, the systems around them. This resonates clearly with the work of the Inclusive Growth Practice at Itad; clients such as DFID are increasingly asking us to evaluate if and how complex groups of interventions interact to produce systemic impacts.

What is a proper approach to thinking about complex systems?

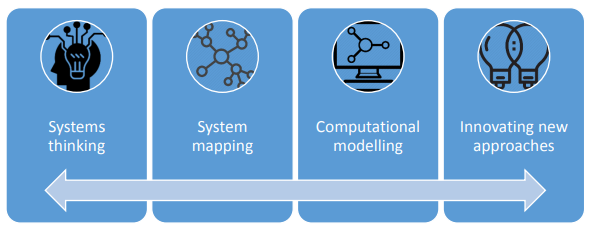

While there is an increasing appreciation that systemic impacts are desirable, there is less consensus on what systems look like and how change in systems can be measured. Dr Egan highlighted that systems are characterised by a number of key attributes. Systems should be thought of as more than a tangled pattern of relationships; instead, a key characteristic is that they produce their own patterns of behaviour over time (and as such are more than the sum of their parts). There are two principal schools of thought around conceptualising and measuring systems, ‘Systems Thinking’ and ‘Complexity Science’, which broadly sit on a continuum from more qualitative (to the left of the diagram above) to more quantitative (to the right).

What can a focus on complex systems add to evaluations?

A key goal of evaluations at the ‘systems thinking’ end of the continuum should be to identify and map multiple influences and, in doing so, to compare the perspectives of a wide range of stakeholders. These evaluations should focus not just on the intervention itself but on how the system influences it. This influence will change over time, and as such the goalposts for the evaluation will also shift; this can be used to justify an adaptive evaluation design.

Evaluation designs at the ‘complexity science’ end of the continuum are much less common in the international development space but might be expected to become more common as donors increasingly focus on the interaction of interventions within complex systems. These designs focus on quantitative approaches to modelling systems and causal relationships and testing hypotheses. As such, they often focus on hypothetical interventions (as opposed to systems-thinking evaluations, which typically focus on actual interventions) and are used ex-ante to test new intervention designs and combinations of interventions.

Concluding thoughts

Given the increasing focus in international development on measuring systemic change, thinking about systems through the lenses of ‘systems thinking’ and ‘complexity science’ provides a useful starting point for identifying and selecting appropriate evaluation approaches, depending on the task at hand. It also gives an indication of what a ‘good quality’ evaluation in this area should focus on. The tools and approaches associated with complexity science, in particular, are an area for Itad to focus more on in the future.